
I saw part of a discussion recently which got me re-examining the notions of rotation and reflection. The motivating question could be paraphrased as:

Should the operation $x \mapsto -x$ be viewed as a reflection or a rotation?

The answer is, of course, that it depends on what you mean by reflection and rotation. Oh, and by "$x$".

In actual fact, it is the last of these that caught my eye because one of the participants in the discussion wanted the answer to also make sense for functions, which is where it all gets very interesting indeed.

1 Defining Terms

The right context for talking about rotations and reflections is geometry. As we're going to include functions, we have to be happy with vector spaces of arbitrary – even infinite – dimension. There's a nice anecdote about how to think of vector spaces of arbitrary dimension: think of ordinary $3$–dimensional space and say "$n$" to yourself in a very firm voice. It's often told with a slightly facetious tone of voice, but it's actually exactly what mathematicians do. So if you're happy with spaces like $\mathbb{R}^2$ or $\mathbb{R}^3$ but less so with the idea of an infinite dimensional space, simply think of $\mathbb{R}^3$ and say "infinity" to yourself in a very firm voice.

Not really much more seriously, a vector is something that behaves like we think a vector ought to behave: namely, you can add, subtract, and scale them¹. A vector space is a space of vectors that can all be added to and subtracted from each other – they live together in (relative) harmony. What is slightly weird if you haven't already encountered the notion is that functions are vectors: you can add them, subtract them, and scale them. There are various spaces of such functions, depending on what other conditions we want them to have – such as continuity – and all the usual ones are infinite dimensional.

¹ It may look like a duck, quack like a duck, but if it doesn't add, subtract, or scale like a duck, it isn't a duck.

To start with, though, we'll deal with finite dimensional spaces. We'll also assume that our spaces come equipped with an inner product, which allows us to talk about lengths and angles in a sensible fashion. So we start with a finite dimensional vector space equipped with the tools for lengths and angles. That's what "$x$" is: a vector in this space.

We'll also need some terminology for the map $x \mapsto -x$. For a given vector space, let $I$ denote the identity transformation on that vector space. Then $x \mapsto -x$ is $-I$.

So what about reflections and rotations? The first thing to note is that both are transformations that preserve lengths and angles². Such transformations are called orthogonal. But within the space of orthogonal transformations, which are rotations and which reflections?

² One technicality here: we're only considering linear transformations, which means that all our reflections and rotations also preserve the origin.

Imagine explaining reflections and rotations to someone who hadn't encountered them before. Rotation, you might say, is what happens when you turn. You start somewhere and turn round: that's a rotation. A reflection, on the other hand, just appears somewhere. There's no way to move yourself from your starting position to your reflection.

And that's the definition we'll use:

A rotation is an orthogonal transformation which can be obtained by a continuous path of orthogonal transformations starting at the identity.

A reflection is an orthogonal transformation which is not a rotation.

I should be clear here: this is the broadest definition of rotation and reflection. There are other definitions which you may encounter which are more restrictive. If you ever encounter someone who insists on using a more restrictive definition, just sprinkle this definition liberally with the word "generalised".

Using this definition, every orthogonal transformation is either a rotation or a reflection. It would be useful to have a way to quickly figure out which. I'm very much of the "down with determinants" school, but I can't deny that they are quite useful in this context.

In a finite dimensional vector space, an orthogonal transformation $T$ has determinant either $1$ or $-1$; it is a rotation if $\det T = 1$ and a reflection if $\det T = -1$.

Question 1 can then be answered because in a finite dimensional vector space of dimension $n$, $\det(-I) = (-1)^n$. Therefore $-I$ is a reflection if $n$ is odd and a rotation if $n$ is even.
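To see the parity rule in action, here is a minimal sketch in plain Python. The helper names (`det`, `minus_identity`, `classify_minus_identity`) are my own, and the determinant is computed by naive Laplace expansion, which is only sensible for small matrices.

```python
def det(m):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        # Minor: delete the first row and the j-th column.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def minus_identity(n):
    """The n x n matrix of the map x -> -x."""
    return [[-1 if r == c else 0 for c in range(n)] for r in range(n)]


def classify_minus_identity(n):
    """Name -I as a rotation or a reflection from the sign of its determinant."""
    return "rotation" if det(minus_identity(n)) == 1 else "reflection"


for n in range(1, 6):
    print(n, det(minus_identity(n)), classify_minus_identity(n))
```

The loop simply tabulates the dimension, the determinant, and the resulting label for the first few dimensions.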

2 Promotion to a Higher Plane

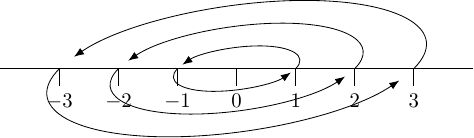

The original context was on numbers, which form a $1$–dimensional vector space. Therefore the answer to the original question is "reflection". But there is an argument to be made for "rotation", wherein the real line is rotated out of itself and back onto itself, as in Figure 1.

The key here is that we need an extra direction to rotate into. This can be generalised: if $V$ is a vector space then we can add a direction and "promote" reflections in $V$ to rotations in this bigger space. The notation for this bigger space is $V \oplus \mathbb{R}$ (think: $V$ plus one more direction). Formally, given an orthogonal transformation $T$ on $V$, we define $\hat{T}$ on $V \oplus \mathbb{R}$ by:

$$\hat{T}(v, t) = (Tv, \det(T)\, t).$$

Then $\det \hat{T} = \det(T)^2 = 1$. So $\hat{T}$ is always a rotation.
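As a rough check of the promotion idea, the sketch below extends a matrix by one extra row and column, letting it act on the new direction by multiplication by its own determinant. That particular formula is my reading of the construction (it is the natural choice that always produces determinant $1$), and the names `det` and `promote` are hypothetical.

```python
def det(m):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def promote(T):
    """Extend T by one direction, acting on the new direction by det(T).

    Assumption: this is the promotion (v, t) -> (T v, det(T) t).
    """
    n = len(T)
    d = det(T)
    rows = [list(row) + [0] for row in T]   # old directions ignore the new one
    rows.append([0] * n + [d])              # new direction is scaled by det(T)
    return rows


minus_one = [[-1]]                # x -> -x on the real line: a reflection
reflection = [[1, 0], [0, -1]]    # reflection of the plane in the x-axis
quarter_turn = [[0, -1], [1, 0]]  # already a rotation

for T in (minus_one, reflection, quarter_turn):
    print(det(T), det(promote(T)))
```

Promoting $x \mapsto -x$ on the real line gives the half turn of the plane, which is the picture of rotating the line out of itself and back again.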

Thus, providing we are happy to make our space a bit bigger, we can always think of $-I$ as a rotation³.

³ This "adding a dimension" technique is actually something quite important, particularly in Algebraic Topology. Sometimes we want to study what stays the same and what changes when we keep doing it. Stuff that "stays the same" is called stable, so we might be tempted to say that stably all orthogonal transformations are rotations, except that when done properly the stabilisation of a transformation $T$ is obtained via $T \oplus 1$ (that is, $T$ on the old directions and the identity on the new one), which keeps reflections as reflections.

The story doesn't finish here; indeed, we're only just getting started. We've seen that when we look at the original context then $-I$ is a rotation or reflection depending on the dimension of the space, but that if we're willing to expand its context we can always force it to be a rotation.

The next stage is to focus on the distinction between merely knowing that $-I$ is a rotation and actually seeing it. That is to say, if $-I$ is a rotation then there is a path through orthogonal transformations from $I$ to $-I$, but how easy is it to find such a path? In the next sections we will examine this, as that will help considerably when we turn to functions.

3 Finding the Path

There's a moment in every "coming of age" story where the protagonist is told to "visualise their goal and the path will become clear".

Sadly, that's not always true.

Imagine standing at the north pole and wanting to travel to the south pole. Even if we insist on travelling in a straight line at a constant speed, any direction will do. So there isn't a preferred choice of path from the north to the south.

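It's the same with travelling from the identity transformation to $-I$ (assuming we're in a space where that transformation is a rotation). The standard method of finding a path works as follows: pair up the coordinates and, in each pair, apply a standard half turn rotation. So in a $4$–dimensional space, the path matrix would look like:

$$\begin{pmatrix} \cos t & -\sin t & 0 & 0 \\ \sin t & \cos t & 0 & 0 \\ 0 & 0 & \cos t & -\sin t \\ 0 & 0 & \sin t & \cos t \end{pmatrix}, \qquad 0 \le t \le \pi.$$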

The problem is that there are many ways of pairing up the coordinates, and indeed many ways of choosing the coordinates in the first place.

So whether we are in an even dimensional space to begin with, or promote our odd dimensional space to one, actually exhibiting $-I$ as a rotation by finding a path is not a natural thing.
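The lack of a preferred path can be made concrete with a small sketch. Assuming a $4$–dimensional space with coordinates numbered from $0$, the hypothetical helper `paired_rotation` rotates each chosen pair of coordinates by the same angle; two different pairings both run from $I$ to $-I$ through orthogonal matrices, yet they disagree in the middle.

```python
import math


def paired_rotation(t, pairs=((0, 1), (2, 3)), n=4):
    """n x n matrix that rotates each listed coordinate pair by the angle t."""
    m = [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]
    for a, b in pairs:
        m[a][a], m[a][b] = math.cos(t), -math.sin(t)
        m[b][a], m[b][b] = math.sin(t), math.cos(t)
    return m


def is_orthogonal(m, tol=1e-12):
    """Check that the columns of m are orthonormal, i.e. m^T m = I."""
    n = len(m)
    for i in range(n):
        for j in range(n):
            dot = sum(m[k][i] * m[k][j] for k in range(n))
            if abs(dot - (1.0 if i == j else 0.0)) > tol:
                return False
    return True


def max_difference(m1, m2):
    """Largest entrywise difference between two matrices of the same shape."""
    return max(abs(x - y) for r1, r2 in zip(m1, m2) for x, y in zip(r1, r2))


identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
minus_identity = [[-x for x in row] for row in identity]
other_pairing = ((0, 2), (1, 3))

print(max_difference(paired_rotation(math.pi), minus_identity))
print(max_difference(paired_rotation(math.pi / 2),
                     paired_rotation(math.pi / 2, other_pairing)))
```

The first printed number is at the level of rounding error (same endpoint), while the second is of order one (different routes).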

4 Doubling Up

There's another way to make odd numbers even, and it has the advantage that it also can be applied to even numbers and they stay even: doubling. Rather than adding a single extra dimension, we simply double all of what's there.

This still fits with the image of rotating the real line because $2 \times 1$ is the same as $1 + 1$, so doubling a $1$–dimensional space has the same effect as adding one dimension to it, meaning that when generalising Figure 1 we should consider both strategies to see which is the "right" generalisation.

The advantage of doubling is that when pairing up directions, each of the original directions has a natural pair: its copy in the double. So if we denote a vector in the original by $x$ and its copy by $ix$, the rotation from $x$ to $-x$ is given by:

$$t \mapsto \cos(t)\, x + \sin(t)\, ix, \qquad 0 \le t \le \pi.$$

The notation is deliberate: this is secretly complex numbers. Instead of regarding our vector space as a real vector space, we augment it to a complex one. This means that as well as scaling by a real scale factor, we can scale by a complex one. And since in the complex plane we can travel from $1$ to $-1$ without passing $0$, we can do so in any complex vector space.

So the notion of $-I$ as a rotation yields a natural path towards complex numbers.
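Here is a minimal sketch of the doubled picture, assuming we store a vector of the doubled space as two lists of real coordinates: one for the original space and one for its copy. The function names are mine. Multiplying by $e^{it}$ mixes each coordinate with its own copy, so no choice of pairing is needed.

```python
import cmath
import math


def complex_path(t):
    """The point e^{it} on the unit circle; t = 0 gives 1 and t = pi gives -1."""
    return cmath.exp(1j * t)


def rotate_doubled(x, y, t):
    """Multiply the vector x + iy of the doubled space by e^{it}.

    x holds the coordinates in the original space, y those in its copy.
    """
    c, s = math.cos(t), math.sin(t)
    new_x = [c * a - s * b for a, b in zip(x, y)]
    new_y = [s * a + c * b for a, b in zip(x, y)]
    return new_x, new_y


x = [1.0, 2.0, 3.0]
zero = [0.0, 0.0, 0.0]
print(rotate_doubled(x, zero, math.pi / 2))  # x has moved into the copy
print(rotate_doubled(x, zero, math.pi))      # x has arrived at -x, up to rounding
```

The path in the complex plane stays on the unit circle throughout, which is why it never has to pass through $0$.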

(In fact, in a complex vector space, there are no "reflections" by our criteria: every unitary⁴ transformation can be connected by a continuous path to the identity.)

⁴ The generalisation of "orthogonal" to complex vector spaces.

5 Complex Structure

Once we have introduced the concept of complex numbers, we can see that our first approach to thinking of $-I$ as a rotation is also secretly introducing complex numbers into the mix.

Let's start with the simplest case: $\mathbb{R}^2$, otherwise known as the Euclidean plane. One way to view the process of picking a path of rotations from the identity round to $-I$ is to imagine starting at $(1, 0)$ and looking up the directions to $(-1, 0)$ in your favourite mapping app. The quickest route would be to head down the $x$–axis, but that's not allowed because we have to stay the same distance from the origin throughout. So we have to travel around the circle. This gives us essentially two routes: clockwise and anticlockwise. Once we make that choice, we apply it to all starting points so that the path from the identity to $-I$ sweeps all points round in the same direction.

Let's consider the transformation which maps a vector to the half-way point, and call this $J$. That's half-way between $I$ and $-I$. In particular, starting at $(1, 0)$ and travelling half-way round leads us to either $(0, 1)$ or $(0, -1)$ depending on the direction. The crucial property of this is that if we apply it twice we do the full rotation from $I$ to $-I$ and so $J^2 = -I$.

The next step is to think of $J$ as "multiplication by $i$". It allows us to make our Euclidean plane into the Argand plane by telling us how to multiply by $i$.

This extends: in an even dimensional vector space, it is possible to find an operator $J$ such that $J^2 = -I$ and then this defines a path from $I$ to $-I$ via:

$$t \mapsto \cos(t)\, I + \sin(t)\, J, \qquad 0 \le t \le \pi.$$

The operator $J$, and operators like it, are interesting enough to get their own name.

A linear transformation $J$ on a real vector space with the property that $J^2 = -I$ is called a complex structure.

So if we can find a complex structure, we can find a path from $I$ to $-I$ and thus exhibit $-I$ as a rotation.
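For the Euclidean plane this can be checked by hand, or with the short sketch below. I'm taking the anticlockwise quarter turn as the complex structure and assuming the path is the combination $\cos(t)$ times the identity plus $\sin(t)$ times the quarter turn; the helper names are hypothetical.

```python
import math

I2 = [[1.0, 0.0], [0.0, 1.0]]
# Anticlockwise quarter turn; [[0, 1], [-1, 0]] would be the clockwise choice.
J = [[0.0, -1.0], [1.0, 0.0]]


def matmul(a, b):
    """Product of two 2 x 2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def transpose(a):
    """Transpose of a 2 x 2 matrix."""
    return [[a[j][i] for j in range(2)] for i in range(2)]


def structure_path(t):
    """The matrix cos(t) * I + sin(t) * J."""
    return [[math.cos(t) * I2[i][j] + math.sin(t) * J[i][j] for j in range(2)]
            for i in range(2)]


print(matmul(J, J))              # applying the quarter turn twice
print(structure_path(math.pi))   # end of the path, up to rounding
```

Every matrix along the path is orthogonal because the quarter turn itself is orthogonal as well as squaring to minus the identity; a complex structure that is not orthogonal would still square correctly but would give a path that leaves the orthogonal transformations.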

6 The Story of Functions

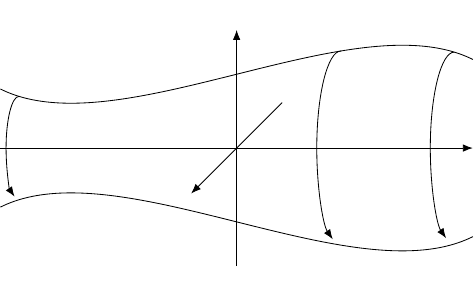

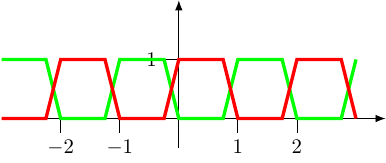

What really interested me in this was the almost postscript "What about functions?" tacked on to the end of the original discussion. A lot of the above story makes sense for functions, in particular the part about viewing real stuff as secretly embedded in complex stuff⁵. One commentator on the original discussion said that they viewed the rotation of $f$ to $-f$ as being like rotating the graph about the $x$–axis. In this setting, we no longer view $f$ as a function into $\mathbb{R}$ but into $\mathbb{C}$ and rotate it within $\mathbb{C}$, as in Figure 2.

⁵ Complex stuff is often actually simpler than real stuff; I used to give a talk where I'd say "At this point, I want to complexify everything in sight to simplify it".

But the first part of the story is more interesting when it comes to function spaces. In that, we figured that $-I$ would be a rotation in an even dimensional space and to exhibit it as such involves finding a complex structure.

There's good news and bad news. The good news is that infinity is even. The bad news is that there is a lot of our discussion which doesn't generalise well to infinite dimensions. As a simple, but ultimately not crucial, example, the notion of a "determinant" only makes sense for very special transformations on infinite dimensional spaces. And the transformation $-I$ is not special in that sense.

More importantly, any finite dimensional real vector space can be made to look like $\mathbb{R}^n$ without too much difficulty and once we know that it looks like $\mathbb{R}^n$ we can easily pair up directions to define a complex structure (if $n$ is even). In infinite dimensions, spaces like $\mathbb{R}^n$ are quite rare. And with function spaces, it can be easier to look for an operator $J$ directly rather than start with directions.

Having said that, some situations are easy. If we take all functions $\mathbb{R} \to \mathbb{R}$ then we don't have any special properties so we can simply pick directions with wild abandon. At the other end of the spectrum, if we take square-integrable functions then this forms what is called a Hilbert space, and these are among the nicest possible spaces in existence⁶. Picking directions here is child's play, and once we have the directions chosen we can pair them up with impunity and know that everything will Just Work.

⁶ Only superseded by nuclear spaces which have a much cooler name.

So the interesting cases lie somewhere in the middle of these. I think that a particularly intriguing one is that of continuous functions, and so that's what I'm going to look at now. I ought to be flinging words like "topology" around here (beyond that the functions are themselves continuous), but I won't: if you know, be assured that this works.

The space of continuous functions on $\mathbb{R}$ is written $C(\mathbb{R}, \mathbb{R})$. What we want to do is to make this into a complex vector space without adding anything to it (so in particular, not converting it to $C(\mathbb{R}, \mathbb{C})$). We'll do that by finding a complex structure $J$ with $J^2 = -I$.

To motivate the next part, let us note that in $\mathbb{C}$, we also have choices of "real" and "imaginary" directions and the multiplication by $i$ takes the real to the imaginary and vice versa. So if we can pick out "real" and "imaginary" directions in $C(\mathbb{R}, \mathbb{R})$ we might be on to something. The "real" and "imaginary" must each be "half" of $C(\mathbb{R}, \mathbb{R})$ in that it should be possible to think of every function as being the unique sum of its "real" and "imaginary" parts⁷.

⁷ Finding the real and imaginary directions is actually stronger than finding a complex structure, so if we can't do it then all might not be lost.

There's an almost obvious split that almost works: take functions that are non-zero only on positive numbers to be "real" and non-zero only on negative numbers to be "imaginary". Then we split a function into its "real" and "imaginary" parts by looking at its restriction to positive and negative numbers respectively.

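The reason that this only almost works is because of that pesky point $0$. If we have two functions, one which is zero for negative numbers and one that is zero for positive numbers, then by continuity, each is zero at $0$ and so their sum has to be zero at $0$.

This means that this would work for the subspace of functions which are $0$ at $0$. Given such a function $f$, we can define its real and imaginary parts to be:

$$f_{\mathrm{re}}(x) = \begin{cases} f(x) & x \ge 0 \\ 0 & x < 0 \end{cases} \qquad f_{\mathrm{im}}(x) = \begin{cases} 0 & x > 0 \\ f(x) & x \le 0 \end{cases}$$

Then $f = f_{\mathrm{re}} + f_{\mathrm{im}}$. The operator $J$ is defined in such a way that it takes a "real" function to the obvious "imaginary" one, and an "imaginary" one to the negative of its corresponding "real" one. If we define $\sigma(x)$ to be the sign of $x$:

$$\sigma(x) = \begin{cases} 1 & x > 0 \\ 0 & x = 0 \\ -1 & x < 0 \end{cases}$$

then $J$ is defined by:

$$(Jf)(x) = -\sigma(x)\, f(-x).$$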

As mentioned above, this only works for the space of functions that are zero at zero. This leaves us with a single left-over direction, which looks as though it puts us in the position of an odd-dimensional vector space from earlier. But fortunately, we can strip off dimensions from our "real" and "imaginary" to match up against this left-over direction.
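To make the zero-at-zero case concrete, here is a minimal sketch in which functions are plain Python callables. The formula used for the operator, minus the sign of $x$ times the function evaluated at $-x$, is my reconstruction from the description (real goes to the mirrored imaginary, imaginary goes to minus the mirrored real), and the names `J0`, `real_part`, and `imag_part` are hypothetical.

```python
import math


def sign(x):
    """Sign of x as -1, 0, or 1."""
    return (x > 0) - (x < 0)


def real_part(f):
    """The part of f living on the non-negative numbers."""
    return lambda x: f(x) if x >= 0 else 0.0


def imag_part(f):
    """The part of f living on the non-positive numbers."""
    return lambda x: f(x) if x <= 0 else 0.0


def J0(f):
    """Assumed complex structure on functions with f(0) = 0: x -> -sign(x) * f(-x)."""
    return lambda x: -sign(x) * f(-x)


f = lambda x: x * math.cos(x)   # continuous and zero at zero
twice = J0(J0(f))

for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(x, f(x), twice(x))    # the last column should be minus the middle one
```

Applied to a "real" function, the result vanishes on the positive numbers and mirrors the original on the negative ones, which is the behaviour the text asks for.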

There's an old Maths joke⁸ which explains why horses have an infinite number of legs: a horse, so the joke goes, has two hindlegs and forelegs. That makes six legs in total, which is an even number but is also a very odd number of legs for a horse. The only number which is odd and even is infinity, and therefore a horse has an infinite number of legs.

⁸ And therefore not a very good one …

The relevance for us is that infinity is both odd and even, by which I mean that it can be divided into two (so is even) but also if I remove an element then it can still be divided into two (so is odd).

So we have to find a direction in one of our real or imaginary parts of the function space to match with $0$, let's say $1$. But as we've removed it from the real part, we also need to remove its counterpart from the imaginary part so that the real and imaginary subspaces match up, so we also need to separate out $-1$. But then we need to remove a direction to match with $-1$, let's say $2$, and its counterpart, namely $-2$.

Continuing, we need to remove all of $\mathbb{Z}$. By removing each point, we've remained balanced so our real and imaginary parts still match up. But by removing an infinite number of points we can now pair them up in a different fashion to provide a complex structure.

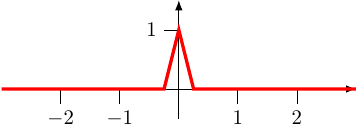

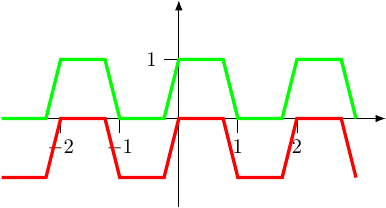

The last bit is to take an arbitrary continuous function and force it to be zero at the integers. To do this, we need a family of "bump" functions. That is, we need continuous functions⁹ $b_n$ with $b_n(n) = 1$ and $b_n = 0$ away from $n$ (in particular, at every other integer). A specific example for $b_0$ is given in Figure 3 and we can take translates of this for the $b_n$.

⁹ In all that follows, to adapt the story to other classes of functions, such as smooth, all we need to do is pick suitable bump functions.

So for a continuous function $f$, we start by subtracting suitable multiples of the $b_n$ to force it to be zero on $\mathbb{Z}$:

$$f_0 = f - \sum_{n \in \mathbb{Z}} f(n)\, b_n.$$

This function can then be split into "real" and "imaginary" parts since it is, in particular, zero at zero.
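As a small illustration of forcing a function to vanish at the integers, the sketch below uses a "tent" as the bump function: it is $1$ at $0$ and falls linearly to $0$ at $\pm 1$. That shape is an assumption on my part (any continuous bump that is $1$ at its own integer and $0$ at the others would do), and the names `bump` and `zero_on_integers` are hypothetical.

```python
import math


def bump(x):
    """Tent function: 1 at 0, falling linearly to 0 at +-1, and 0 beyond."""
    return max(0.0, 1.0 - abs(x))


def zero_on_integers(f):
    """Return f minus the sum over n of f(n) * bump(x - n).

    The result is continuous whenever f is, and vanishes at every integer.
    """
    def f0(x):
        n = math.floor(x)
        # Only the bumps centred at n and n + 1 can be non-zero at x.
        return f(x) - f(n) * bump(x - n) - f(n + 1) * bump(x - n - 1)
    return f0


f = lambda x: math.cos(x) + 2.0
f0 = zero_on_integers(f)

for x in (-1, 0, 0.5, 1, 2.25):
    print(x, f(x), f0(x))
```

With the tent bump, the subtracted sum is just the piecewise linear interpolation of the values at the integers, so the leftover function measures how far the original is from that interpolation.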

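The last step is to declare half of the bump functions $b_n$ to be "real" and half to be "imaginary". The simplest way is via the odd/even split. The full split for the original function $f$ is then:

$$f = \Big( (f_0)_{\mathrm{re}} + \sum_{n \text{ even}} f(n)\, b_n \Big) + \Big( (f_0)_{\mathrm{im}} + \sum_{n \text{ odd}} f(n)\, b_n \Big),$$

where $f_0 = f - \sum_n f(n)\, b_n$ is the function forced to be zero on the integers, and $(f_0)_{\mathrm{re}}$ and $(f_0)_{\mathrm{im}}$ are its "real" and "imaginary" parts (its restrictions to the non-negative and non-positive numbers).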

This is a little bit messy. We can clean it up a little bit by noting that when we force a function to be zero at all integers, we are effectively splitting it into an infinite number of nice segments that can be separated and recombined without worrying about the continuity of the resulting function. Each segment relates to our original function on a particular interval of the form $[n, n+1]$, so we have one for each integer. In our complex structure, we link the segment on $[n, n+1]$ with the segment on its negative counterpart, $[-n-1, -n]$. But when we look at the values of the function on $\mathbb{Z}$, we link $n$ with either $n+1$ or $n-1$ (depending on parity). So we have two different schemes in play for pairing up integers. Maybe if we settle for just one we might get a cleaner structure.
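Messy or not, the first scheme can be checked numerically. The sketch below is my own assembly of the pieces and should be read as one possible reading rather than the definitive construction: tent bump functions, even integers declared "real" and odd ones "imaginary", the bump at an even $n$ sent to the bump at $n+1$, the bump at an odd $n$ sent to minus the bump at $n-1$, and the mirrored formula with a sign on the part that is zero at the integers. All the function names are hypothetical.

```python
import math


def bump(x):
    """Tent function: 1 at 0, falling linearly to 0 at +-1, and 0 beyond."""
    return max(0.0, 1.0 - abs(x))


def sign(x):
    """Sign of x as -1, 0, or 1."""
    return (x > 0) - (x < 0)


def zero_on_integers(f):
    """f minus the sum over n of f(n) * bump(x - n): zero at every integer."""
    def f0(x):
        n = math.floor(x)
        return f(x) - f(n) * bump(x - n) - f(n + 1) * bump(x - n - 1)
    return f0


def J(f):
    """Assumed complex structure on continuous functions on the real line."""
    f0 = zero_on_integers(f)

    def Jf(x):
        # Part that is zero at the integers: mirror it, with a sign.
        core = -sign(x) * f0(-x)
        # Bump part: only the bumps centred at floor(x) and floor(x) + 1 matter.
        n = math.floor(x)
        bumps = 0.0
        for m in (n, n + 1):
            if m % 2 == 1:
                coeff = f(m - 1)    # image of the "real" bump at the even m - 1
            else:
                coeff = -f(m + 1)   # minus the image of the "imaginary" bump at m + 1
            bumps += coeff * bump(x - m)
        return core + bumps

    return Jf


f = lambda x: math.cos(x) + 0.3 * x + 2.0
JJf = J(J(f))

for x in (-3.7, -2, -0.25, 0, 0.5, 1, 2.4):
    print(x, f(x), JJf(x))   # the last column should be minus the middle one
```

Nothing here uses a special property of the test function beyond continuity, so any other continuous function can be swapped in to repeat the check.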

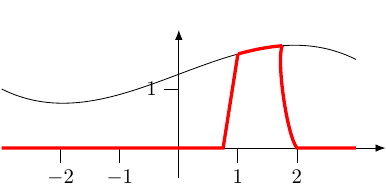

So for and , define by:

See Figure 4 for an example of what this looks like. This is determined by the values of only in the interval but maintains continuity.

Then we define:

And observe that .

The operator then takes to and to .

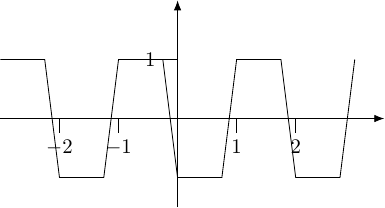

Let's end with the simplest example to see what's going on. Take the constant function $f(x) = 1$, as in Figure 5.

In Figure 6, the red line is the real part of $f$ and the green line is the imaginary part.

Applying $J$ takes the red to the green, and the green to the negative of the red, as in Figure 7.

Recombining these results in the function shown in Figure 8.

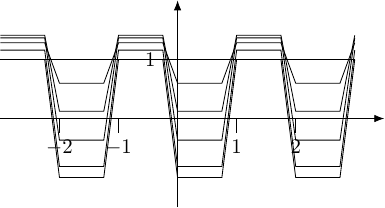

It can be illustrative to look at what happens at intermediate points, namely to look at $\cos(t)\, f + \sin(t)\, Jf$. As the "trough" on the even intervals deepens, the "peak" on the odd intervals temporarily lifts so that when the trough is at $0$, the peak is at its height of $\sqrt{2}$.

Finally, we have our answer: the operation $x \mapsto -x$ is a rotation which can be viewed as taking place internally to the space of functions. In other words, the space of real continuous functions forms a complex vector space.